EMVS: The EM Approach to Bayesian Variable Selection

Caleb Jin / 2017-12-15

Introduction

The EMVS (Rockova and George 2014) method is anchored by EM algorithm and original stochastic search variable selection (SSVS). It is a deterministic alternative to MCMC stochastic search and ideally suited for high-dimensional settings. Furthermore, EMVS is able to effectively identify the sparse high-probability model and find candidate models within a fraction of the time needed by SSVS. This algorithm makes it possible to carry out dynamic posterior exploration for the identification of posterior modes.

The outline of this course project is as follows. In section 2, we build original SSVS method, including its prior and posterior distribution for each parameter. In section 3, I derive the author’s new idea EMVS method in very details that the author omits. In section 4, I perform several simulation case studies from this paper and me, then compare the results of SSVS and EMVS.

Stochastic Search Variable Selection (SSVS)

Model Settings

Consider a multiple linear regression model as follows: where is the -dimensional response vector, is the design matrix, , is a scalar, and . Both and are considerd bo te unknown. We assume that . From (1), the likelihood function is given asThe cornerstone of is the “spike and slab” Gaussian mixture prior on , where . .

Hence, , where .

For the prior on the , I follow the (George and McCulloch 1997) and use inverse gamma prior but different notation, where we will use and , and is also independent of .

I follow the (George and McCulloch 1997) and use Bernoulli prior form but different notation, Hence, We assume where we will use , which leads to the uniform distribution.

It is easy to show that the full conditionals are as follows:

- where and .

- where and

- For , we have

where we will use , which leads to the uniform distribution. We therefore have

EMVS

Genared toward finding posterior modes of the parameter posterior rather than simulating from the entire model posterior , the EM algorithm derived here offers potentially enormous computational savings over stochastic search alternatives, especially in problems with a large number p of potential predictors.

consists of two steps: E-Step, conditional expectation given the observed data and current parameter estimates, and M-Step, entails the maximization of the expected complete data log posterior with respect to .

Define . EM algorithm maximizes by iteratively maximizing the objective function where denotes .

According to the class note, it can be written as At the th iteration, given , an E-step is first applied, which computes the expectation of the right side of (4) to obtain . This is followed by M-step, which maximizes over to obtain .

The E-Step

The E-step proceeds by computing the conditional expectations and for and , respectively.

- For ,

Hence, ,

.

Hence,

Hence,

- For , Hence, where

The M-Step

Maximize

values that maximizes in Eq.(6) is equivalent to This is quickly obtained by the well-known solution to the ridge regression problem:

Given , solve the solution of , we can easily obtain

- Maximize

Hence, we have .

Simulation Case Study

According to this paper, the author simulate a dataset consisting of observations and predictors. Predictor values for each observation were sampled from where with . Response values were then generated according to the linear model , where and with .

The R code is shown below

library(mvtnorm)

library(ggplot2)

library(reshape2)

n <- 100

p <- 1000

beta <- c(3,2,1,rep(0,p-3))

Sigma <- matrix(NA,p,p)

for (i in 1:p) {

for (j in 1:p) {

Sigma[i,j] = 0.6^(abs(i-j))

}

}

set.seed(2144)

x <- matrix(rmvnorm(n,mean = rep(0,p), sigma = Sigma),nrow = n)

y <- x%*%beta + rnorm(n,0,sqrt(3))

## prior and initial values

MC = 20

nu0 = 0.5

nu1 <- 1000

hat.theta = rep(1,MC)

hat.theta[1] = 0.5

hat.gamma <- rep(1, p)

hat.beta = matrix(NA,nrow = MC, ncol = p)

hat.beta[1,] = rep(1,p)

hat.sig2 <- rep(NA,MC)

hat.sig2[1] <- 1#mean((y - x %*% hat.beta[1,])^2)

for (i in 2:MC) {

a <- dnorm(hat.beta[i-1,],0,sd = sqrt(hat.sig2[i-1]*nu1))*(hat.theta[i-1])

b <- dnorm(hat.beta[i-1,],0,sd = sqrt(hat.sig2[i-1]*nu0))*(1-hat.theta[i-1])

p.star = a/(a+b)

D.star <- diag((1-p.star)/nu0+p.star/nu1)

D.star.5 <- diag(sqrt((1-p.star)/nu0+p.star/nu1))

hat.beta[i,] <- solve(t(x)%*%x+D.star)%*%t(x)%*%y

hat.sig2[i] <- (t(y-x%*%hat.beta[i,])%*%(y-x%*%hat.beta[i,]) +

t(D.star.5%*%hat.beta[i,])%*%(D.star.5%*%hat.beta[i,]) + 1)/(n+p+1+1)

hat.theta[i] <- sum(p.star)/(p)

# hat.theta[i] = 0.5

##################################################

## uncomment if you want to see the convergence ##

# plot(beta,hat.beta[i,], ylim = c(-0.5,3))

# abline(h = 0, col = 4)

# abline(a=0,b=1,lty=2)

# print(i)

}

data1 <- data.frame(beta=beta,hat.beta=hat.beta[MC,])

fig1 <- ggplot(data1,aes(beta,hat.beta))+

geom_point(color = 'red',size = 2,alpha = 0.7)+

ylab(expression(hat(beta)))+

xlab(expression(beta))+

ylim(c(-0.3,3)) +

ggtitle(label = 'MAP Estimate versus True Coefficients')+

geom_abline(intercept = 0, slope = 1,lty = 2)+

theme(axis.title.x = element_text(size=12),

axis.title.y = element_text(size=12),

axis.text.x = element_text(size=12),

axis.text.y = element_text(size=12))

# print hat.theta and hat.sigma

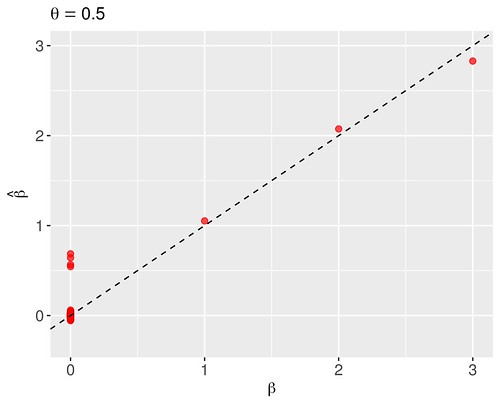

print(round(c(hat.theta[MC],sqrt(hat.sig2[MC])),4))## [1] 0.0031 0.0404Beginning with an illustration of the algorithm from the section 3, we apply it to the simulated data using the spike-and-slab prior (2) with a single value , and the . The starting values for the algorithm were set to and . After only four iterations, the algorithm obtained the modal coefficient estimates depicted in Figure 1, displaying the estimated and true . In my case, the associated modal estimates of and were 0.003 and 0.040, respectively; which are very similar to results of the paper.

For comparison, I applied the same formulation except with the Bernoulli prior (3) under fixed Figure 2. Note the inferiority of the estimates near zero due to the lack of adaptivity of the Bernoulli prior in determining the degree of underlying sparsity.

Figure 1: Modal estimates of the regression coefficients

Figure 2: Modal estimates of the regression coefficients

The effect of different and values on variable selection

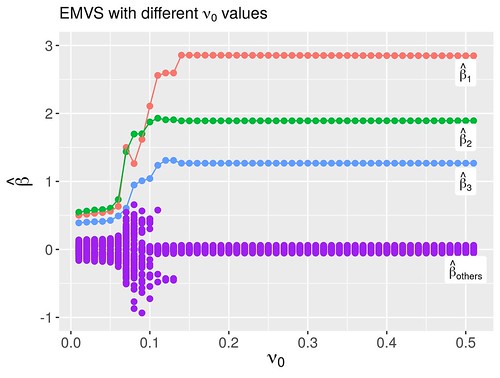

Rather than fixing as 0.5, I vary it from 0 to 0.5 to see its effect on the variable selection. I imitate the author’s method to consider the grid of values again with fixed and . Figure 3 shows the modal estimates of regression coefficients obtained for each . We can discover that only when , we can get a good estimation for the .I also consider the effect different initial values of on the variable selection. Fix and , I set the grid of where is a p-dimension vector with each value . Figure 4 depicts modal estimates of regression coefficients with different initial values of . We discover only when , we can get good estimation for the .

I also consider the effect different initial values of on the variable selection. Fix and , I set the grid of where is a p-dimension vector with each value . Figure 4 depicts modal estimates of regression coefficients with different initial values of . We discover only when , we can get good estimation for the .

Figure 3: Modal estimates of the regression coefficients

Figure 4: Modal estimates of the regression coefficients

Reference

George, Edward I., and Robert E. McCulloch. 1997. “APPROACHES for Bayesian Variable Selection.” Statistica Sinica 7 (2): 339–73. http://www.jstor.org/stable/24306083.

Rockova, Veronika, and Edward George. 2014. “EMVS: The Em Approach to Bayesian Variable Selection.” Journal of the American Statistical Association 109 (June). https://doi.org/10.1080/01621459.2013.869223.